Berkeley Deep Drive-X (eXplanation) is a dataset composed of over 77 hours of driving across 6,970 videos. The videos cover diverse driving conditions, e.g. day/night, highway/city/countryside, and summer/winter. Each video is about 40 seconds long on average and contains around 3-4 actions, e.g. speeding up, slowing down, or turning right, each of which is annotated with a description and an explanation. Our dataset contains over 26K activities in over 8.4M frames.
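The headline figures above can be cross-checked with a little arithmetic. The sketch below is illustrative only: it treats the quoted "over"/"around" values as exact, and the function name `summary_stats` is hypothetical, not part of any BDD-X tooling. Under those assumptions the totals work out to roughly 40 seconds per video, about 3.7 actions per video, and an implied frame rate of about 30 frames per second (the last assumes the 8.4M frames span all 77 hours).

```python
# Headline totals quoted in the text; they are lower bounds or approximations,
# so the derived values below are approximate as well.
TOTAL_HOURS = 77
NUM_VIDEOS = 6_970
NUM_ACTIVITIES = 26_000
NUM_FRAMES = 8_400_000


def summary_stats(hours: float, videos: int, activities: int, frames: int) -> dict:
    """Hypothetical helper: derive per-video averages and the implied frame rate."""
    total_seconds = hours * 3600
    return {
        "avg_video_seconds": total_seconds / videos,    # ~39.8 s
        "avg_actions_per_video": activities / videos,   # ~3.7 actions
        "implied_fps": frames / total_seconds,          # ~30.3 frames/s
    }


if __name__ == "__main__":
    stats = summary_stats(TOTAL_HOURS, NUM_VIDEOS, NUM_ACTIVITIES, NUM_FRAMES)
    for name, value in stats.items():
        print(f"{name}: {value:.1f}")
```

The three derived values agree with the "40 seconds" and "3-4 actions" stated in the description, which suggests the quoted totals are mutually consistent.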

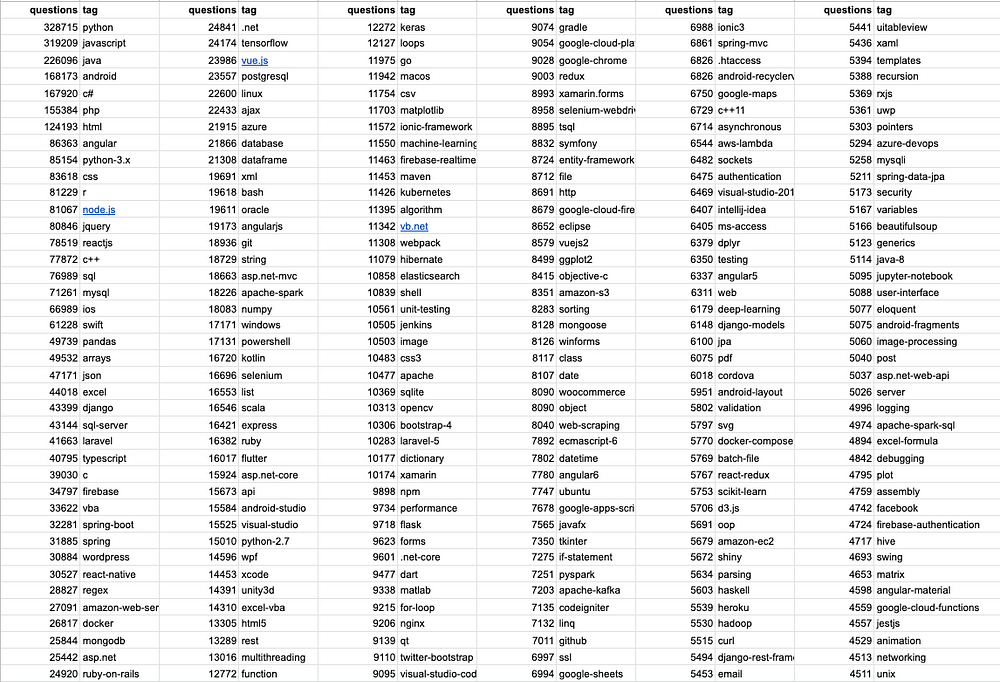

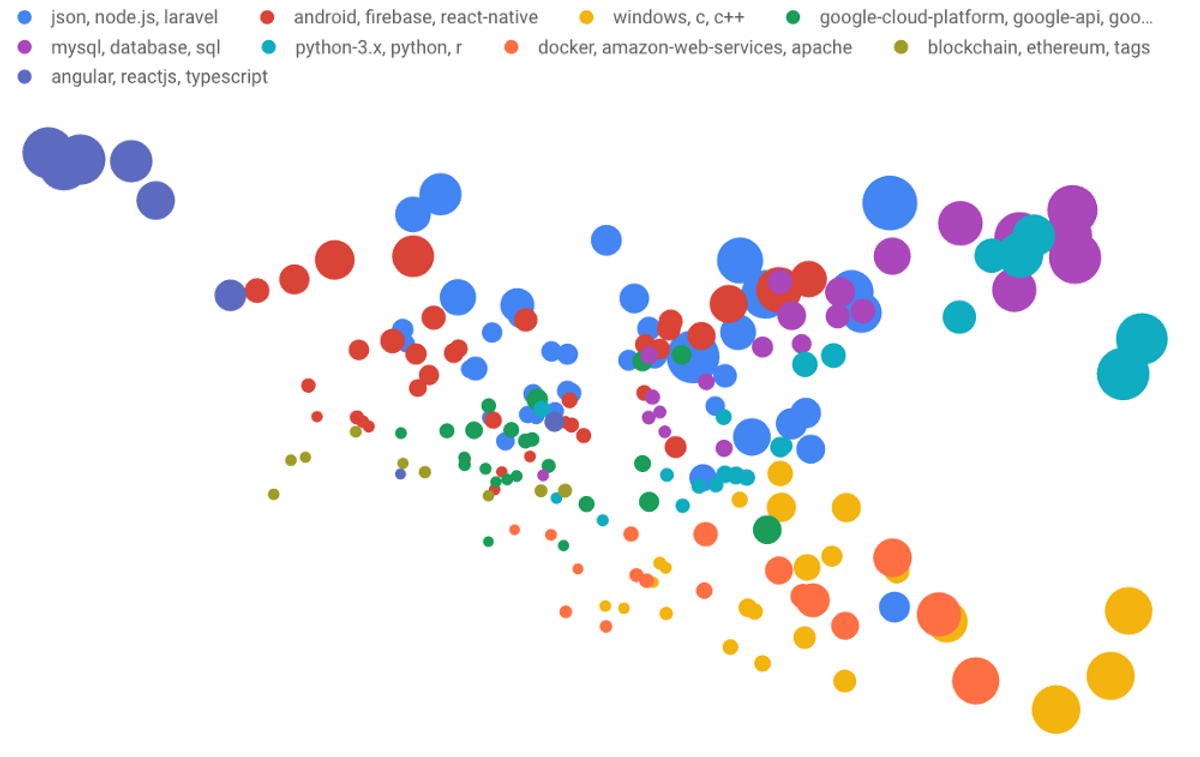
